Scaling Time-Series Classification in Big Data

Project information

- Category: Big Data Technologies - Apache Spark

- Done for: University Module

- Project date: 2024

- Project URL: https://github.com/Daniel2tio/Scaling-Time-Series-Classification

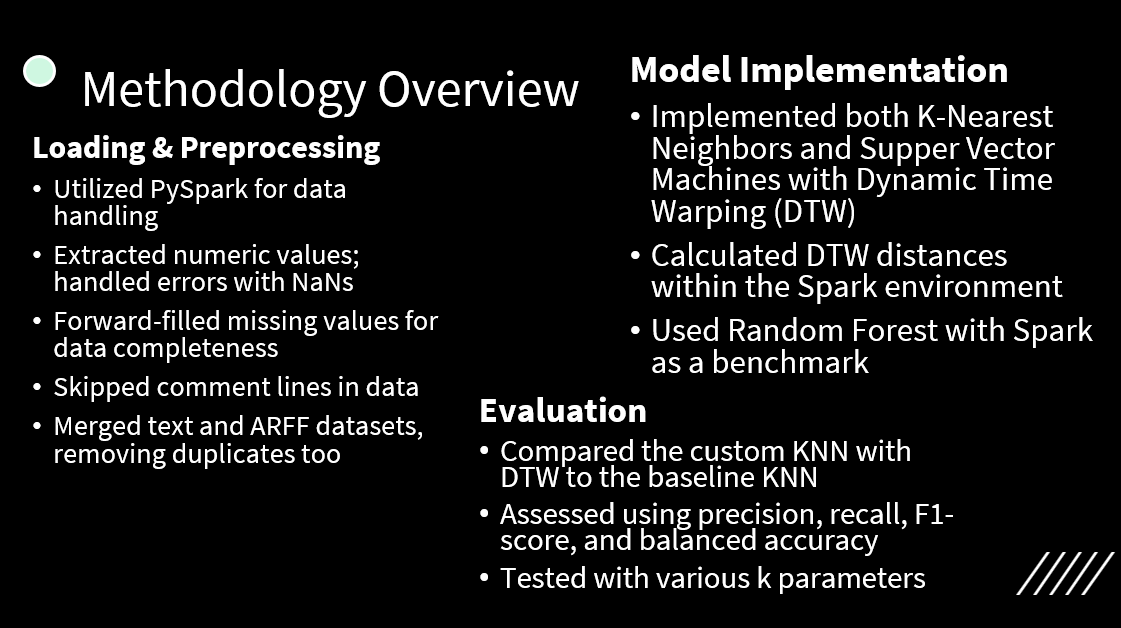

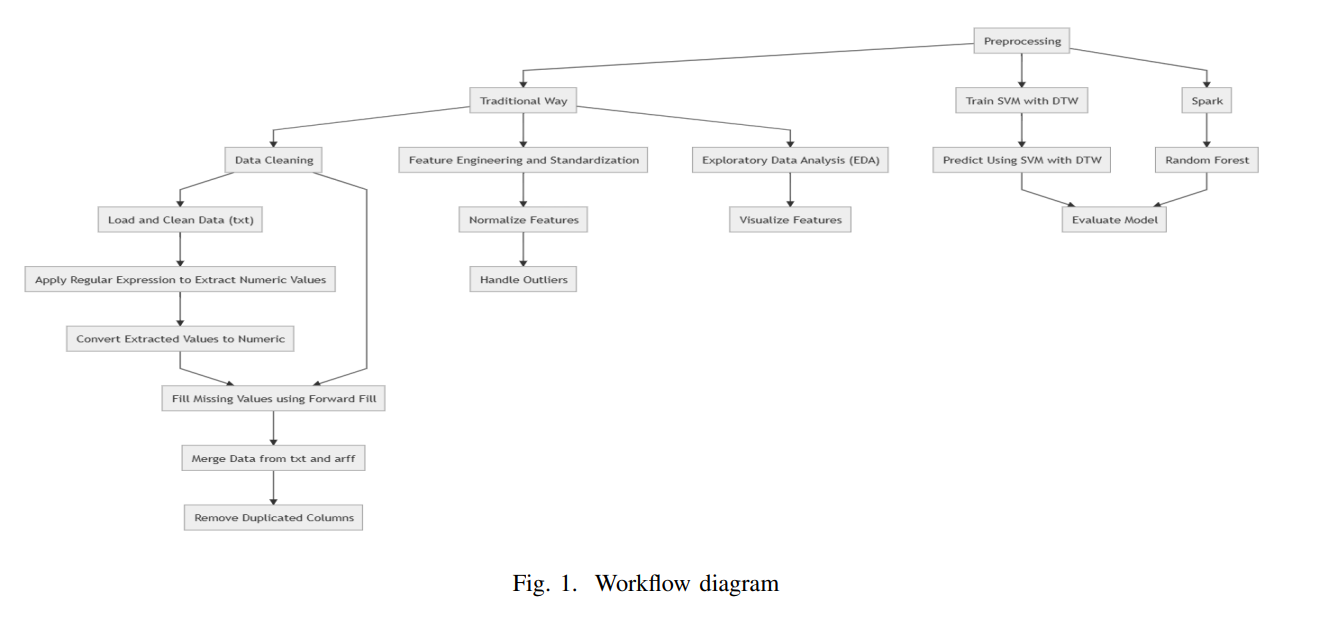

This study addresses the challenge of efficiently classifying large-scale time series data using traditional methods like KNN with Dynamic Time Warping (DTW) and SVM with DTW, alongside scalable models like Random Forest, and leverages PySpark for parallel processing to enhance scalability and performance in Big Data analytics.

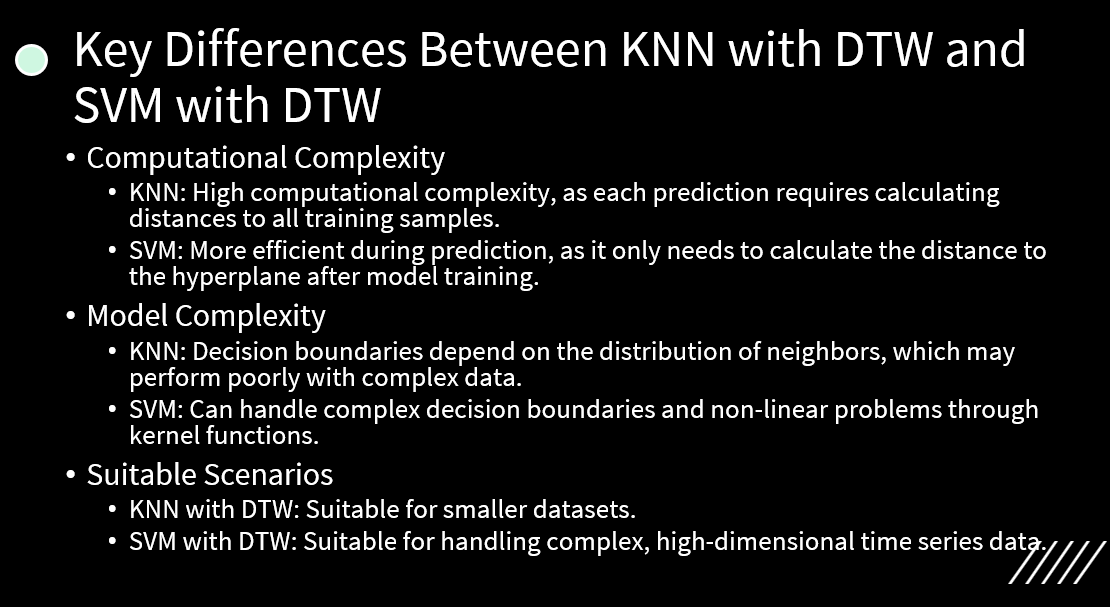

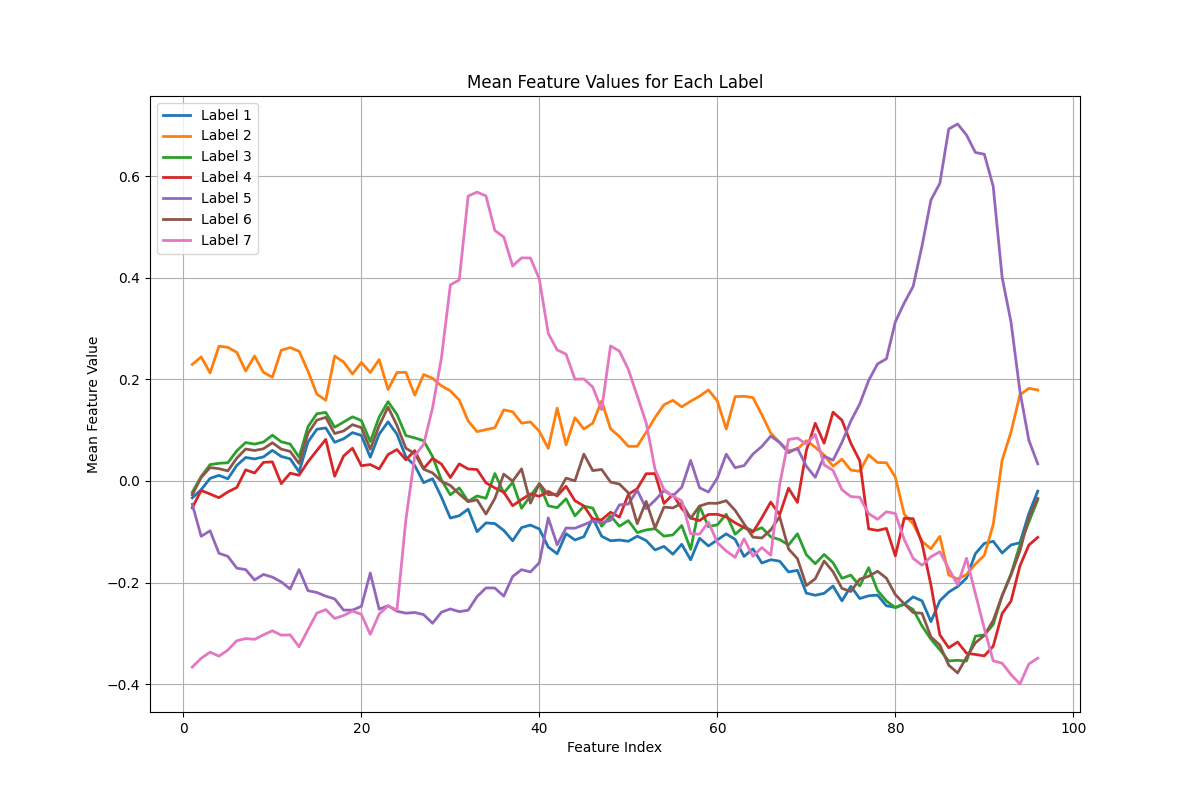

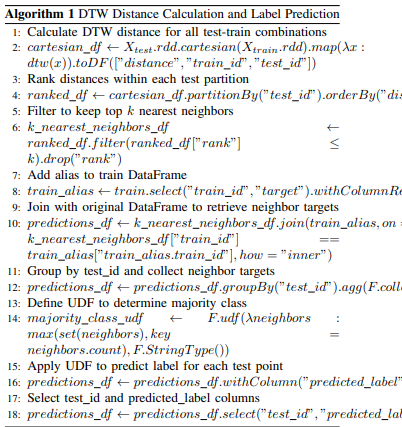

Our experiments revealed important insights into the scalability of these methods. KNN with DTW, while effective in capturing temporal dependencies, exhibited limitations as dataset sizes increased. The computational demands associated with pairwise DTW distance calculations posed challenges in terms of processing time and memory utilization. In contrast, SVM and Random Forest demonstrated better scalability characteristics, leveraging kernel methods and ensemble learning techniques for efficient handling of high-dimensional data. Notably, despite the advantages in scalability, it’s essential to acknowledge the sub optimal accuracy scores obtained from all models, reflecting the complexity of the dataset and the inherent challenges in time series classification. The confusion matrix analysis revealed significant misclassifications across different classes, emphasizing the difficulty in capturing distinct patterns accurately within this context. One notable finding was the computational efficiency of scikit-learn’s KNN implementation with PySpark DataFrame compared to the custom KNN with DTW approach. The scikitlearn model exhibited significantly faster execution times, highlighting the optimization potential of distributed computing frameworks like PySpark. Moving forward, there are several avenues for improvement. Exploring deep learning models like N-BEATS for time series classification within a distributed computing environment could offer enhanced scalability and efficiency. Additionally, leveraging techniques such as Locality Sensitive Hashing (LSH) with KNN could expedite nearest neighbor searches and mitigate scalability challenges associated with large datasets.